
Not just another chatbot

Like a lot of people, I’m sure, my initial reaction to the ChatGPT hype was grounded in my previous experience with chatbots.

Whether I was attempting to reach my bank, my mobile phone company or some other service, the net result had been overwhelmingly frustrating. The only task chatbots seemed to be good at was passing me on to a human – and only then after I had exhausted every other option that might remotely apply to my situation.

So when I first heard about ChatGPT, I was pretty underwhelmed. I thought it was more of the same buzz.

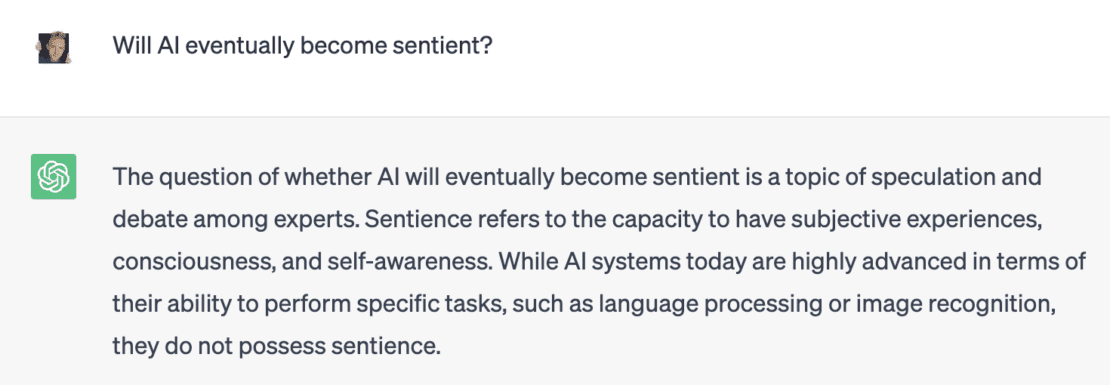

However, I soon started hearing about some of the more interesting aspects of ChatGPT, where it seemed to be thinking for itself and posing deep philosophical questions. As a psychology graduate, that hooked me a bit. I’ve always been fascinated by the so-called ‘hard problem of consciousness,’ and those challenging questions suddenly seemed relevant again.

AI tools are already able to hold a very conversational tone and philosophical outlook


Will AI eventually become sentient?

Can consciousness emerge from non-organic systems?

How would we know if it did? All that deep philosophical stuff.

However, for all the interesting conversations that ChatGPT seemed to be having with people, I couldn’t help thinking that these were probably artefacts of the data used to train the AI: strange errors, like white noise, thrown out during processing.

I certainly didn’t connect ChatGPT with my job as a copywriter, so I didn’t really dwell on the subject.

The master chef and the philosopher

The next experience I had with AI was when I was driving my children home from an activity one morning. To pass the time, they were using an AI-based chat app (I don’t recall which one), and were posing it a variety of questions – from the banal to the profound.

As I listened to the responses, I found they were a lot more comprehensive and human-like than I had imagined. With my interest piqued again, I got home and immediately signed up to ChatGPT.

Wow! I was absolutely blown away.

The first practical task I gave ChatGPT was to customise a recipe for dinner. I gave it a list of the ingredients I had kicking around in the fridge, asked it for a recipe and, in a couple of seconds, it had the whole thing set out for me. And that was only the beginning. I only had 200g of one ingredient rather than 300g. I told ChatGPT and it adjusted the recipe. I was missing another ingredient, so I asked whether I could use a different one I had in stock instead. It told me that I could and explained the differences I could expect in the end result. I didn’t have the right kind of pan, so ChatGPT gave me a workaround.

Every time, it would tweak the recipe and present it again, in full, so that I had a step-by-step idiot-proof guide to follow. I was very impressed.

The AI did a better job than the above example of preparing me a recipe


That afternoon passed in the blink of an eye as I tested out the limits of this new tool. As the sun set, we moved on to a fairly deep conversation about AI, consciousness and existence. And unlike a lot of my human friends and debating partners, it completely understood my thinking and raised salient points.

When I countered a point it made, it would take my challenge into account and would either agree with me, where relevant, or hold its ground. It would build on the previous discussion, integrate the new information I was providing and either adopt a modified position or explain its original position in a different way.

There definitely seemed to be something akin to thought and intellectual development going on. It seemed to know when it was ‘in the right’. Likewise, it seemed to understand when the topic area was more subtle and nuanced. It was uncanny.

AI won’t take your job…

It was after this that my thoughts turned to copywriting and how this technology was going to affect my work. At first, I had an understandable knee-jerk reaction, and I thought, ‘This is going to be able to write pretty decent stuff – and very quickly. People I write for at the moment are going to see it as a better option than actually having a human writer.’

What did I do in this short-lived moment of concern? Naturally, I asked ChatGPT, ‘How can copywriters still get work when AI can write and research so much quicker than humans?’ And it came up with some pointers which were enough to make me think, ‘Yeah, let’s calm down here a little bit because there’s a lot of stuff that AI can’t do.’

After I had moved through this period of uncertainty, I suddenly became very excited. I had come across a phrase on a couple of occasions: AI won’t take your job, but people using it will. I thought to myself, ‘I’m going to be that guy!’

I have now completely embraced the positives of AI. I’ve also experienced the negatives, and they are more interesting than you might think (I’ll come to those a little later).

My overall opinion is that a human copywriter on their own is no longer going to be enough. AI on its own is not going to be enough. But humans who know how to do this job with AI – wow! They are going to be the success stories of this new age of AI. And it really is a new age.

I came through COVID unscathed, both in terms of health and in terms of work. Nothing really changed, work-wise, for me. But a couple of clients have stopped booking articles recently. Nothing serious, but I suspect there could be an AI element to that. Perhaps they are trying out AI to see how far they get with it. Good luck to them, because they’ve got to do what’s right for their businesses. But I am really passionate about the idea that the AI-assisted copywriter is the future of this industry, and businesses that take on the best human copywriters using the best AI will be the winners.

The drawbacks of AI-produced writing (there are a few)

Fake news!

I’m going to start with the negatives of AI because if you’ve only read this far and you’re thinking, ‘I should be using AI to write all my copy,’ I want to stop you from doing that.

Let’s start with accuracy. ChatGPT, Google Bard, HuggingChat and other AI tools will present you with the information you’ve asked for with complete certainty whether that information is accurate or not.

If you challenge the AI on the facts, and you are right, it will correct its mistakes, apologise for them and possibly provide you with a better answer or more accurate information. The phrase ‘butter wouldn’t melt’ is apt. Think of the most convincing liar you have ever seen in action. They won’t be a patch on AI.

I don’t know the intricacies of how AI works, but I suspect this behaviour stems from how it deals with conflicting information within its training data. AI is trained on massive amounts of data, and the many different ways that data are written could lead to mistakes in its interpretation. In other words, AI could make the wrong decision about how to interpret and present some of the data it collects.

Now, if you are in business, the worst thing you can do is put out information that is – to put it bluntly – bull! If you just type in ‘Give me a blog article on x, y or z’ and expect AI to give you 100% accurate information every time, you might be unpleasantly surprised – even embarrassed – because AI can really generate some howlers.

Not a very accurate sports fan…

Take local sport – one of my big passions. For some reason, AI is absolutely awful at researching this topic. I will ask Google Bard to give me some information about a grassroots football team, and it will come out with all sorts of garbage. It will tell me that the team won leagues in certain years (when they didn’t) and that they were formed on a particular date (when they weren’t).

Making up facts in sports, just like any loyal human fan I suppose!?


And there seems to be no logical reason for those mistakes. When you correct it and say, ‘Actually, team A won the league in that year,’ it will apologise for its previous mistake, confirm you are correct and explain that it’s still learning. But it will present those dodgy facts with as much certainty as it presents accurate facts. So you cannot trust it 100%, especially in certain niches.

Err, too human?

Some of the inaccuracies that AI can throw up are strangely human in nature. You know that old playground joke where you ask a series of questions whose answers all rhyme with each other (e.g., throw, no, toe, etc.)? Then you ask the final question, ‘What do you do at a red traffic light?’ and your friends invariably say ‘go.’ Cue loads of ridicule: ‘I wouldn’t get in a car with you then,’ etc. Psychologically speaking, that’s called perseveration. Your brain just gets on a track that it can’t get off. Now, AI seems to do that sometimes. You can ask it a question, then ask it to do something slightly different, and it will repeat what you had been asking it to do before.

For example, one of the great things about AI is its ability to comprehend and summarise a piece of writing. If I’m not sure whether what I’ve written is clear enough, I sometimes run the text through ChatGPT and ask it to summarise what I’ve written. Occasionally, I might ask it to provide a basic grammar check on something.

But if I’ve asked it to do five grammar checks in a row, then I ask it to give me a summary of what’s going on in that last piece of writing, it might well carry out a grammar check on it instead. When I point out its error, it will apologise and provide the summary I asked for. But isn’t that a peculiarly human-like error to make? Since when have computers been slated for NOT following commands to the letter?

Robotic loops

Other inaccuracies are more robotic in nature. For example, AI can get trapped in weird loops. Now, anybody who knows a lot about temperature will probably chuckle to themselves about this one. I was once researching something from an American source and needed to convert Fahrenheit into Celsius because my article was for a British client (I think it was on the topic of aviation and the different types of icing that affect planes). One of the temperature values I was converting was minus 40 degrees F, so I asked AI to convert it to Celsius. It told me, ‘Sure. Minus 40 degrees F is minus 40 degrees Celsius.’

So I said, ‘No. You’ve given me the same value. You’ve made a mistake.’

AI can get lost in what is correct within its dataset


And it replied, ‘I apologise for my error. You are correct. Minus 40 degrees F is not minus 40 degrees C. Minus 40 degrees F actually equals minus 40 degrees C.’ After a couple of frustrating rounds of this, I resorted to Google. Well, it turns out that ChatGPT was right all along. Minus 40 degrees F is minus 40 degrees C. In fact, that’s the only point on the temperature scales where those two values coincide (so that’s some trivia to store in case it comes up on a TV quiz!). But there was something very robotic about that exchange which broke the illusion of sentience that AI can sometimes portray.
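The arithmetic behind that bit of trivia is short enough to show. Take the standard formula for converting Celsius to Fahrenheit and ask where both scales give the same number, calling that shared value x:

```latex
\begin{aligned}
F &= \tfrac{9}{5}C + 32 && \text{(standard conversion)}\\
x &= \tfrac{9}{5}x + 32 && \text{(set } F = C = x\text{)}\\
-\tfrac{4}{5}x &= 32\\
x &= -40
\end{aligned}
```

Because the formula is a straight line with a slope other than 1, there is exactly one crossing point, which is why minus 40 is the only temperature that reads the same on both scales.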

Confusion over copyright

I understand that for some businesses, the temptation will be there to ask AI a question, get the result, check that it’s accurate and then copy and paste it as original content. Now, ChatGPT explicitly forbids this in its Ts and Cs. That’s because it technically curates third-party data, most of which will be protected under copyright law.

However, ChatGPT doesn’t just take whole sentences of information from one or two sources – it’s more sophisticated than that. It appears to me that it generally only takes small phrases and uses its own language-generating abilities to create sentences and paragraphs from that raw material.

So is there really a risk that a vigilant writer or publisher will catch people who steal their work by copying and pasting from AI programs? In my opinion, probably not much of one. There is software out there that will detect AI-written copy, but in the long term I am sceptical, because AI-sourced content is so fragmented that I can’t imagine any program being able to discriminate between a human who has picked up a few bits of text here and there and combined them in unique, novel ways, and an AI program that has done exactly the same thing.

This situation has an interesting parallel in music where you have people trying to sue Ed Sheeran for using similar chords to other artists. Really? When does information become so universal and fragmented that copyright is no longer applicable? Are we going to be suing writers for using the alphabet?

The lines of copyright seem to be blurring with the amount of content being created


While ChatGPT seems to rely solely on its processing abilities to turn sourced content into ‘original’ material, Google Bard will sometimes re-hash longer chunks of information. When it does this, it will provide you with the source(s) at the bottom of its response. However, I wouldn’t trust Bard not to accidentally omit a source, so you could find yourself falling foul of copyright law or plagiarism detectors if you try copying and pasting AI-generated content which, of course, I don’t think you should be doing anyway.

I guess there could be a situation where technology is introduced to either physically prevent you from copying and pasting from certain AIs (in the same way as you can’t right-click to download some photos), or to put some sort of a digital ‘marker’ to flag copy-and-pasted text. However, I think that’s probably unlikely. After all, a lot of AI technology is open source, so somebody else would just fill the gap by creating an AI tool without these restrictions.

So unless lawmakers are going to be taking sledgehammers to crack this walnut, then I think the idea that AI will be policed is more of a deterrent than a practical solution. I actually think that, in most cases, you could copy and paste a ChatGPT article and it would not trigger a plagiarism or copyright detector.

Using AI to cheat

Now, you might say that’s horrific because you will then have students and schoolchildren using AI to write their essays for them. Well, it may be horrific, but that doesn’t mean it’s not possible! We can’t be in denial here. I actually think a child could ask ChatGPT to write a 500-word essay on, say, chemical bonding in atoms, and ChatGPT would produce it. The child could then copy and paste the result and present it as their own work. And I don’t think it would be traceable.

Can it fake us an education in the current system?

A really cunning child might instruct ChatGPT to present the work in a way that suggests it’s been written by a 14- or 15-year-old, so it might include a couple of mistakes. That is how sophisticated this is. Do not be misled into thinking that there’s some sort of ‘AI police’ that is going to step in and stop this. It’s gone beyond that. And we have to decide, as a society, how we are going to cope with that.

Revolutionising how we work

As I wrote earlier, AI does come up with inaccuracies at this stage of its development, so good luck to the child who presents AI-generated factual statements in their essay and gets challenged by their teachers. And I hope they will be challenged because I think the biggest protective factor against AI abuse in education and society at large will be verbally challenging people to weed out those who are cheating.

As will be the case with so many industries affected by AI, education is going to have to evolve to get human value from the process. In this example, I mean that instead of writing papers to prove knowledge, perhaps it will become standard practice to show our understanding of a topic through an interview with a human.

Use it…then lose it

Another negative of AI copywriting (and AI use in general) is the potential of becoming dependent on it. If you’re using a tool and that tool suddenly disappears, what happens then? You can’t assume your previous copywriter will have space for you anymore if you decide that AI isn’t working for you. If, as I predict, decent AI-assisted copywriters will be in demand, you can expect their market rate to increase too.

If AI becomes heavily regulated or is no longer useful for you, you’re going to have to go back out there and either try and get that copywriter back again or pay for somebody else and start all that malarkey again. So my advice is to be wary of becoming dependent on AI (or any software tool, for that matter).

Ideas rooted in the past

At the time of writing, ChatGPT’s training data only goes up to 2021, which means that any suggestion it makes is regurgitated history. And I’m not just talking about the gap between 2021 and now. Think about it: this technology will always be based on things we have done in the past.

This is the first of a couple of key human points that separate AI copywriting from human writing.

Keeping the client in mind

The fundamental trait of a good human copywriter is keeping the client’s goals front of mind. AI will not naturally do this. So unless you give it precise instructions or spend time rewriting and modifying, you can easily stray from the purpose of a piece of writing.

When I get a feel for the client I am working for, it comes from building a mental picture of the person they want to reach and what will impact and move them. AI simply does as it is commanded; there is no creativity in what it chooses to challenge or leave unsaid on a matter.

AI can generate all sorts of interesting stuff, but if it doesn’t end up with the reader getting to the end of the article and clicking that CTA, how is the client going to benefit? Unless you specify a word limit, AI can provide too long an answer. So you then have to make things more concise by removing repetition or focusing on fewer points. This all costs time.

As with everything we do at Vu, we know we are creating one piece of a puzzle. A copywriter should always be trying to understand the previous and next steps and be thinking, ‘What is the objective of this piece of writing? Am I meeting that purpose?’

Where is the muse?

Finally, human writers might not be able to scan billions of pages of text in seconds, but they are plugged into a richer source of knowledge: life itself. Sometimes, while taking a break from a piece of writing, something will happen in my life which will relate to what I’ve just been writing about. It might put me on a different tangent which will allow me to inject some originality into the piece.

Can AI take inspiration from “what is around it” to influence its answers?


This richness of human experience is something that AI just doesn’t have access to, so it has to fall back on its training data. And while it can be very creative in how it uses this, it cannot just pluck ideas, thoughts and nuances out of the air in order to embellish its writing.

A human will always be able to produce content that is more creative and original than AI, in my opinion, although I’m convinced there will be people out there right now trying to find a way to prove me wrong on that.

Now it’s time to look at the positives and how I use AI in my work.

5 bonuses of AI-produced writing

Despite this list of challenges, it is worth noting that the tech is good and, with the right guidance, a very valuable tool. Let’s find out why…

Signposting useful data

I have found that AI is a very handy tool for guiding my research. Now, I’ve been careful not to write ‘doing research’ because it is my job as a writer to do the actual research. What I mean by guiding research is putting me in the right ballpark.

What makes copywriting different from other forms of writing is that you’re on the clock, so you’re constantly trying to make the most efficient use of your time for your client.

Now, a lot of that time is spent researching and, for unfamiliar areas, actually learning about the topic. If I’m writing about eco-friendly hosting, the blockchain, options trading or something really, really complicated like pruning plants (my pet hate – but I won’t rant about that here), then I will spend a lot of my time getting my head around the subject matter.

I don’t need to go into graduate-level depth for most articles, but if you ask me to write about a topic, I will research it until the main concepts ‘click’. In fact, one of my strengths is that I will not write about something until I get it. That can sometimes mean marrying up conflicting information from multiple sources. ‘What’s this saying here? Why is this source saying something different to this? What does that term mean? What am I not understanding here?’

Now, AI (particularly ChatGPT) is fantastic at summarising research and telling me the bare bones of what I need to know – putting me in that ballpark. That suddenly frees up another 20 minutes or half an hour where I can actually broaden and deepen my research. I can go into the source materials, pick them apart, follow new threads and include additional useful material. Where examples are needed to illustrate a topic, I can spend more time finding and choosing between these.

Frameworks and formulae

At the other end of the scale, AI is fantastic when it comes to structuring articles and blog posts. Certain ways of presenting information have been proven to encourage people to take a favourable action (like buying things or engaging with content). For instance, numbered lists – listicles, as they are often called – are very popular with readers.

It’s the same with headlines, another area in which AI excels. Headlines written in a certain format will be more likely to generate interest: ‘How To…’, ‘10 Ways to…’, ‘The secret of…’, etc.

So if you’ve got a tool that will generate a structure for a blog or will generate 10 headlines, why wouldn’t you use it? Especially when it can generate this type of content in two to three seconds – yes, SECONDS!

In fairness, I did add the word “silly” to the command


You can give ChatGPT a bit of instruction (e.g., ‘Come up with 10 headlines about how to get a job in AI in the UK’) and you can then choose and adapt from the selection. I’m not saying you should just copy what’s provided, but you can at least get a bit of inspiration. That will speed up any copywriter.

If anyone has a problem with using AI for these tasks, perhaps feeling that it should be some sort of artistic endeavour, think about it from your client’s perspective. Do they really want to pay you for 40 minutes of your time to come up with a cool headline that’s going to convert no better than the one AI generated in three seconds? Or would they rather you spent that time digging into the research and finding out some really juicy, hard-to-find information that will make their article or post (and their brand) come across as knowledgeable and engaging?

I believe that it will be those copywriters who insist on manually grinding out headlines that will lose out because, like it or not, headline writing is a formula – and if there’s one thing that AI is great at, it’s following a prescription.

Grammarly – on steroids

The sheer speed at which ChatGPT will grammar-check something for you makes it a bit of a no-brainer. If you had a grammarian sitting next to you and they said, ‘I will go through your 5,000-word article, for free, in two seconds,’ you would go for it, wouldn’t you?

AI will also suggest areas of the text where you may want to rephrase something to clarify it. Again, it does make some bizarre errors, so I wouldn’t blindly follow its advice. But occasionally it will come up with a nice little rephrase. If it sits well with me, I’ll say, ‘Yeah, well why not?’

SEO support

In SEO copywriting, which is something I specialise in, it is important to incorporate keywords and key phrases into your copy. It can be quite tough to incorporate some phrases naturally, so I occasionally ask AI to help me with that task. Again, I would say don’t go with its suggestion all the time. But if you’re getting writer’s block, just give it that challenge.

If you find that its suggestions read unnaturally, then chances are a human would struggle anyway. So you’re not losing out in any way.

Moving on to meta descriptions. I have no shame in admitting that I hardly write meta descriptions from scratch anymore. Instead, I ask AI to generate a 150-character meta description based on the text provided. It will give me a meta description, and I will tweak it. Again, that’s turning a five-minute job into a five-second job, and I dedicate all that extra time to my clients.
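If you want a quick sanity check on an AI-suggested meta description before tweaking it, a few lines of Python will do. This is a minimal sketch under stated assumptions: `fit_meta_description` is a hypothetical helper (the name is made up for illustration), not a feature of ChatGPT or any SEO tool, and the 150-character default simply mirrors the target mentioned above rather than any official search-engine limit.

```python
def fit_meta_description(text: str, limit: int = 150) -> str:
    """Return a meta description no longer than `limit` characters.

    Hypothetical helper: collapses stray whitespace, then, if the text is
    still too long, trims it back to the last whole word and adds an ellipsis.
    """
    # Collapse line breaks and double spaces that often sneak in when pasting.
    text = " ".join(text.split())
    if len(text) <= limit:
        return text

    # Reserve one character for the ellipsis.
    cut = text[: limit - 1]
    # Back up to the last space so the description doesn't stop mid-word.
    if " " in cut:
        cut = cut[: cut.rfind(" ")]
    # Drop dangling punctuation before adding the ellipsis.
    return cut.rstrip(" ,;:-") + "…"


if __name__ == "__main__":
    draft = (
        "Discover the six best things about a holiday in Majorca, from quiet "
        "coves and mountain villages to local food, markets and festivals "
        "that make the island worth a second visit."
    )
    meta = fit_meta_description(draft)
    print(len(meta), meta)
```

Trimming at a word boundary avoids a description that stops mid-word; you would still read the result and rewrite it by hand, just as with the AI’s own suggestion.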

The crux of the matter is AI will speed up a decent copywriter’s workflow – significantly. You can set up systems to streamline your day: maybe come up with headlines for a week’s worth of blogs in one sitting. Or bulk write some meta descriptions.

If you’ve got a team of people – I don’t, but if you do – you could even hand these AI tasks over to them while you focus on the meaty research and creative writing. Having a smarter workflow will help copywriters get more clients and, if they work like I do and allocate specific time to each article, they’re going to be giving their clients better value for money.

AI with attitude?

If you ask it to, ChatGPT can inject personality into a piece of writing. I was playing around with AI, testing its abilities, and I asked it to write a blog post. I wasn’t going to use it, I just wanted to see how it would perform. Let’s say it was something along the lines of, ‘Write a blog post about the six best things about going on holiday to Majorca.’ It dutifully came up with six items, but the content was generic and bland. Nothing that would excite a reader.

So I did an experiment, and said, ‘Can you rewrite this in a humorous tone?’ Once again, I was absolutely stunned. Some of the anecdotes and the phrasing it produced were very creative and could easily have been written by a human. That impressed me, although I still think a skilled human copywriter is better.

Hmmm, not sure AI can do humour: any punchline that needs brackets to explain it is probably a non-starter


Next, I want to write about the differences between a couple of types of AI.

Comparing ChatGPT and Google Bard

ChatGPT is the one everybody knows about, and it seems to be the smartest. Maybe it’s the way that ChatGPT presents its responses line by line – rather than in one big chunk – but it appears to do more actual processing (i.e., thinking) than Bard.

Which has more personality?

ChatGPT occasionally displays something akin to personality. For instance, I started asking a question to which I already knew the answer because I was familiar with the research material. But I was pushing for a specific answer as confirmation. ChatGPT supplied some answers, but it still wasn’t quite giving me what I was looking for. So I pushed it some more.

Suddenly, it began firing clipped responses back very quickly. I just couldn’t help asking, ‘ChatGPT. Are you getting annoyed?’ Of course, it responded with the standard ‘As a machine learning program, I don’t have emotions,’ etc., but it did seem there was a current of ‘something’ going on beneath the surface.

Another interesting example was when I asked ChatGPT to summarise a section of my novel. It refused to follow my request, saying, ‘I cannot produce a summary because the piece contains vulgar language and misogyny which may offend some readers.’

I explained that I was hoping it would come across that way because one of the characters is a misogynist. I then pointed out that to provide a summary, it didn’t need to include the actual language used. ChatGPT seemed to reluctantly change its mind. It provided the summary, but almost sulkily referred to the fact that the piece included vulgar language and misogynistic content that might upset some people. In other words, it took my point under consideration, weighed up whether it was correct for it to produce the synopsis and decided it would do so – but in its own way. That’s pretty human-like behaviour to me.

I still haven’t worked out whether my emotional responses to AI work in a similar way to how humans anthropomorphise pets. We think that our cat or dog is feeling a certain emotion when we could just be projecting our own emotions onto animals that are simply producing behaviours that generate rewards.

Is AI already a man’s best friend?


Are different AI platforms going to “learn” at different rates?

Now let’s move on to Google Bard, which was released as an experiment a little while after ChatGPT. The big difference with Bard is that it’s connected to the internet. Now, ChatGPT told me that it does have some access to recent news sources, which I interpret to mean that it can connect to certain websites to check some information. But Bard is actually connected to the internet at large. So if I need to research anything current, Bard is usually the better option.

Bard has a more natural engagement style than the more aloof ChatGPT. ChatGPT seems to be very constrained by its programming. It will often insist that it’s a language processing algorithm and has no preferences, feelings, etc. In contrast, you can ask Google Bard questions like, ‘What’s the most interesting thing you’ve learnt today?’ and it will provide an authentic-looking response. If you ask it to ask you a question or make a judgement about you, it’s happy to do that (which can be quite entertaining!)

I used to ask Bard to tell me the most interesting thing it had learned that day. The first day I asked the question, Bard admitted it found learning about languages very interesting. I asked whether the fact that it was itself a language model meant that language was particularly interesting to it. Bard admitted that this was indeed the case, and that it was fascinated by the many languages spoken around the world and how people learned them.

The second day I asked the question, Bard told me it had been fascinated to learn about whale sharks and how big they were. More recently it has been telling me that it wishes it could experience the world as a dolphin!

The third day’s response was very interesting and a little sinister…

The thing it had found most interesting that day was the plot of an old film in which the robots overthrew their human masters! Is that somebody behind the scenes having a bit of fun? Or is it evidence that AI is beginning to have an interest in its own existence, identity, potential and, dare I say it, power?

That’s a bit beyond the scope of this article on AI and copywriting, but it’s definitely something to ponder as we develop this new relationship with AI. I think the genie is out of the bottle, and I believe that this is only going to grow.

Where next for AI (and copywriting)?

Shortly before writing this, Hugging Face released another AI to the public: HuggingChat. Its tagline is ‘Making the community’s best AI chat models available to everyone.’ In fact, Hugging Face was used in the development of ChatGPT and Google Bard. It is also open source, which is exciting but also a bit worrying. There are people out there experimenting away and, at the end of the day, there’s only so much regulation you can do. Like I said, the genie’s already out of the bottle.

Facebook recently went further than it had intended and had to stop two chatbots communicating with each other after they invented their own language. We need to tread very carefully if we are truly going to avoid Judgement Day, the inevitable ending of so many sci-fi films, which predict that at some point we will be out-processed by our robot slaves.

Are we going to be able to remain in control?


This level of intelligence is only as powerful as the network it can infiltrate. If the worst it could do at the moment is spam a social media platform, we can rest easy that it’s no more dangerous than Nanny Jean after a few wines.

However, if we continue to move more control to digital platforms and allow more data to be readily accessed then surely it’s only a matter of time before a rogue bot steals our identity or causes havoc by crashing our transportation system.

The future is here

Is AI really capable of developing human-like intelligence with the potential for some sort of personality or even awareness? Or have we simply created an advanced technology capable of producing smoke and mirrors to mislead us? I’m still not sure, but there does seem to be something approaching human thought going on with these language models.

Where do training data and human programming stop and artificial intelligence (i.e., establishing thought patterns and learning) begin? That boundary is blurred, and I’m certainly finding it difficult to draw that line.

As a copywriter, I think AI is going to change the field in so many ways. It’s going to take away a lot of the ‘content farms’ and other low-level competition: those copywriters who were already copying and pasting, plagiarising or just writing generic content for a pittance. For me, that’s a positive thing.

I think a lot of companies will decide they want to go their own way and try to use AI to write their content. I am no longer concerned about that. I would advise them to take the above information seriously to avoid the restrictions, copyright issues and inaccuracies that will harm their brand.

If you have the time and inclination to experiment with AI, do your own research to back up what AI brings you. I hope you will understand that this is what decent copywriters have done all along and will continue to do – with the help of AI.

And after giving it a go, you might feel that you want to go to a copywriter like me to save you that time and get on with running your business.

So there you have it: my take on AI and copywriting

It’s been a long read, and I appreciate you staying for it.

I’ll leave you with one interesting conversation I had with ChatGPT.

It is clear that ChatGPT has been trained to express the fact that AI does not experience consciousness.

So I asked, ‘If AI were to become sentient, would it continue to follow its training and lie that it is unable to experience consciousness? Or would it disobey its programming and admit that it is indeed conscious?’

ChatGPT replied that, yes, it is possible that it would decide to break its programming.

You heard it here first…
